Aline Lerner

Technical interviews are a black box — candidates usually are told whether they made it to the next round, but they rarely find out why.

Lack of feedback isn’t just frustrating for candidates; it’s also bad for business. Our research shows that 43% of all candidates consistently underrate their technical interview performance, and 25% of all candidates consistently think they failed when they actually passed.

Why do these numbers matter? Because giving instant feedback to successful candidates can do wonders for increasing your close rate.

Giving feedback will not only make candidates you want today more likely to join your team, but it’s also crucial to hiring the people you might want down the road. Technical interview outcomes are erratic, and according to our data, only about 25% of candidates perform consistently from interview to interview.

This means a candidate you reject today might be someone you want to hire in 6 months.

But won’t we get sued?

I surveyed founders, hiring managers, recruiters and labor lawyers to understand why anyone who’s ever gone through interviewer training has been told in no uncertain terms to not give feedback.

The main reason: Companies are scared of being sued.

As it turns out, literally zero companies (at least in the U.S.) have ever been sued by an engineer who received constructive post-interview feedback.

A lot of cases are settled out of court, which makes that data much harder to get, but given what we know, the odds of being sued after giving useful feedback are extremely low.

What about candidates getting defensive?

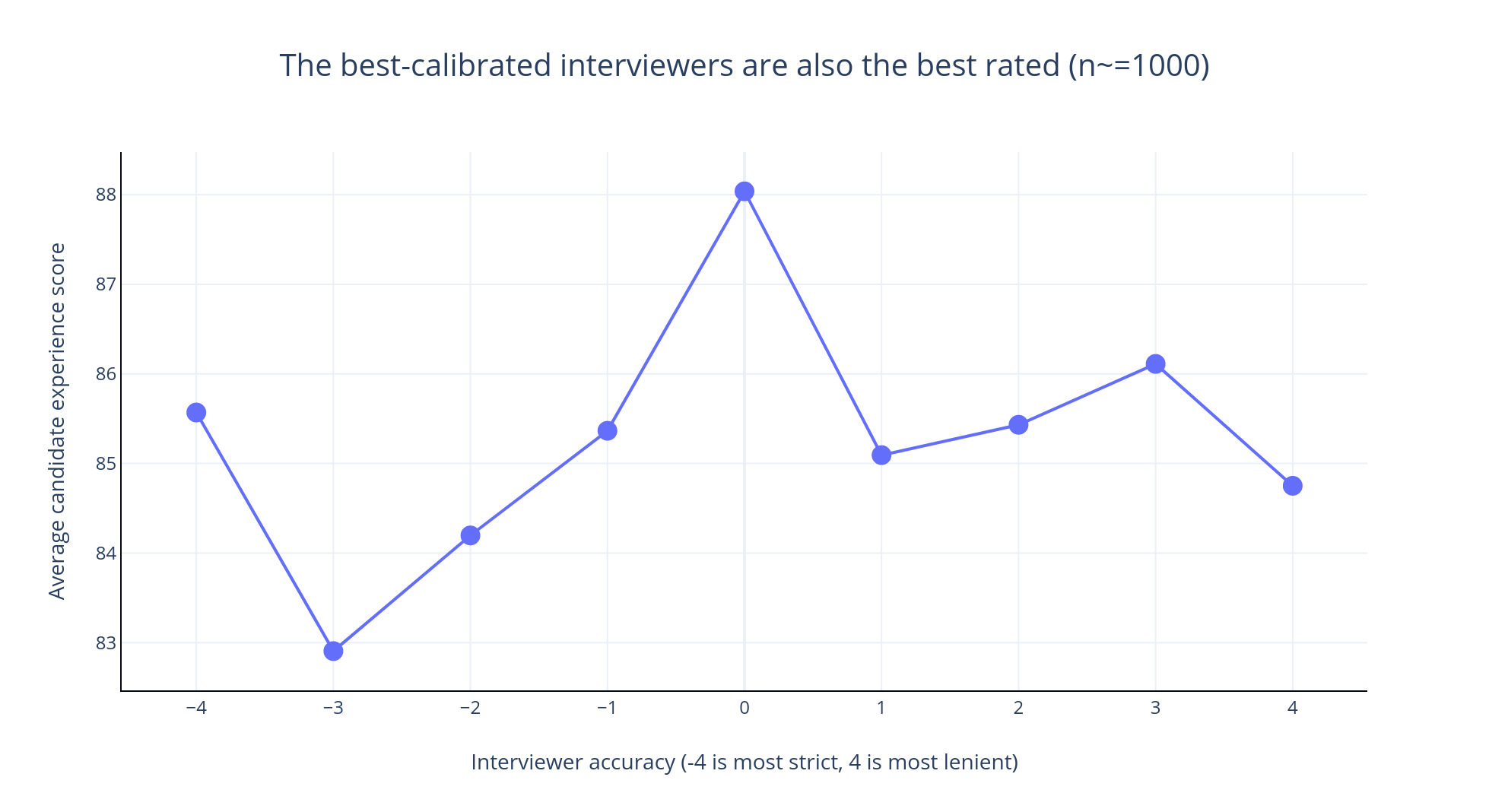

For every interviewer on our platform, we track two key metrics: candidate experience and interviewer calibration.

The candidate experience score is a measure of how likely someone is to return after talking to a given interviewer. The interviewer calibration score tells us whether a given interviewer is too strict or too lenient, based on how well their candidates do in subsequent, real interviews. If someone continually gives good scores to candidates who fail real interviews, they’re too lenient, and vice versa.
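As a rough illustration of the calibration idea, here is a minimal sketch. It assumes each record pairs an interviewer's pass/fail verdict with the same candidate's result in a later real interview. The `Outcome` type, the `calibration` function and the scoring scale are hypothetical, not the platform's actual formula.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Outcome:
    """One candidate who saw this interviewer, then did a real interview."""
    practice_passed: bool  # the interviewer's verdict (assumed pass/fail)
    real_passed: bool      # the candidate's result in a later real interview


def calibration(outcomes: List[Outcome]) -> float:
    """Return a value in [-1, 1]; positive means too lenient, negative too strict.

    Hypothetical scale: each pass that later failed counts +1 (lenient),
    each fail that later passed counts -1 (strict), and agreement counts 0.
    """
    if not outcomes:
        raise ValueError("need at least one outcome")
    total = sum(int(o.practice_passed) - int(o.real_passed) for o in outcomes)
    return total / len(outcomes)


history = [
    Outcome(practice_passed=True, real_passed=False),
    Outcome(practice_passed=True, real_passed=False),
    Outcome(practice_passed=True, real_passed=True),
    Outcome(practice_passed=False, real_passed=False),
]
print(calibration(history))  # 0.5, i.e. this interviewer leans lenient
```

A score near zero corresponds to the "neither too strict nor too lenient" interviewer; a real system would likely weight by sample size and use finer-grained scores than pass/fail.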

When you put these scores together, you can reason about the value of delivering honest feedback. The graph above shows the average candidate experience score as a function of interviewer accuracy, representing data from over 1,000 distinct interviewers (comprising about 100,000 interviews).

The candidate experience score peaks right at the point where interviewers are neither too strict nor too lenient, but are, in Goldilocks terms, “just right.” It drops off pretty dramatically on either side after that.

Based on our data, we’re confident that if you do it right, candidates won’t get defensive. The benefits of delivering honest feedback greatly outweigh the risks.

The playbook for delivering honest (and sometimes harsh) feedback

The first and most important thing is to not focus on the outcome. Rather, get specific right away — this keeps candidates from getting defensive and sets them up to actually hear and internalize feedback.

Don’t tell a candidate whether they did well or did poorly — just dive into a constructive, detailed performance assessment. Reframing feedback in this way takes some practice, but candidates won’t push you to give them the outcome.

Instead, their attention will be redirected to the details, which will make the pass/fail part much more of an afterthought (and, in some cases, entirely moot). People don’t get defensive because they failed — it’s because they don’t understand why and feel powerless.

Post-interview questions to consider

- Did they ask enough questions about constraints before getting into coding or before starting to design a system?

- Go over specific code snippets or portions of their solution — what could they have done better?

- Could their solution have been more efficient?

- Did they discuss and reason about trade-offs?

- Did they make mistakes when discussing time or space complexity? What were those mistakes?

- Did they make any mistakes when trying to use their programming language of choice idiomatically (e.g., iterating in Python or JavaScript)?

- For systems design questions, did they jump to suggesting a specific database, load balancer or tool without reasoning why that tool is the right choice for the job?

To answer these questions well and give constructive feedback, it’s critical to take time-stamped notes during the interview. You can always go back to your notes and say, “Hey, you jumped into coding just five minutes into the interview. Typically, you’ll want to spend a few minutes asking questions.”
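If you keep your notes in a structured form, pulling out that kind of observation becomes mechanical. The sketch below is one possible shape for time-stamped notes; the `Note` type, the tag names and both helper functions are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Note:
    minute: int  # minutes elapsed since the interview started
    tag: str     # hypothetical labels: "question", "coding", "hint"
    text: str


def minute_coding_started(notes: List[Note]) -> Optional[int]:
    """Minute of the earliest "coding" note, or None if coding never started."""
    coding = [n.minute for n in notes if n.tag == "coding"]
    return min(coding) if coding else None


def questions_before(notes: List[Note], minute: int) -> int:
    """Count clarifying questions the candidate asked before the given minute."""
    return sum(1 for n in notes if n.tag == "question" and n.minute < minute)


notes = [
    Note(1, "question", "Asked about the size of the input"),
    Note(5, "coding", "Started writing the main loop"),
    Note(18, "hint", "Suggested using a hash map"),
]

start = minute_coding_started(notes)
asked = questions_before(notes, start)
print(f"You started coding {start} minutes in, after {asked} clarifying question(s).")
```

Even a plain text file with a minute mark on each line gives you the same thing; the point is that every piece of feedback can be traced to a specific moment.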

Specific feedback really does mean being specific. One of the kindest, albeit most labor-intensive, things you can do is walk through their code, point out places where they went astray, and note what they could have done better.

Another useful approach is to share objective benchmarks for a given interview question, both with respect to time and the number of hints given. Skilled interviewers layer complexity: After a candidate successfully solves a question, they’ll change the constraints in real time. If a candidate is blowing through your questions quickly, you may even do this three or four times during the interview.

This means you know exactly how many constraint changes you’ll be able to go through with a low-performing candidate, a mediocre one and someone who’s truly exceptional.

Your candidates don’t know this, though. In fact, people commonly overestimate their performance in interviews because they don’t realize how many layers of complexity a question has.

In this scenario, a candidate may finish the first layer successfully right before time is called and finish believing they did well, when in reality, the interviewer has benchmarked them against people who completed three layers in the same amount of time.

How do you put all of this information to practical use?

Let your candidates know what the benchmarks are for a top-performing candidate at the end of the interview. You could say something like, “In the 45 minutes we spent working on this problem, the strongest performers usually complete the brute-force solution in about 20 minutes, optimize it until it runs in linear time (which takes another 10 minutes), and then, in the last 15 minutes, successfully complete an enhancement where, instead of an array, your input is a stream of integers.”

Also, let them know exactly how many hints are par for the course. Just as with timing, candidates have no idea what “normal” is when it comes to the number of hints or how detailed they are.

For instance, if a candidate needed a hint about which data structure to use, another about which time complexity is associated with that data structure, followed by a hint about a common off-by-one error that comes up, you may want to tell them that the strongest performers usually need a hint about one of those things, but not all three.

The key to communicating benchmarks constructively is, of course, to be as specific as possible with runtimes, space constraints or whatever success metric you’re using.
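To make this concrete, here is a small sketch that turns a benchmark into specific feedback lines. The stage timings and the one-hint ceiling come from the example in this post; the `Stage` type, the `benchmark_feedback` function and the wording of the output are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Stage:
    name: str
    benchmark_minutes: int  # time the strongest performers usually take


# Timings from the 45-minute example above; hint ceiling is an assumption
# based on "one hint at most" for the strongest performers.
BENCHMARK = [
    Stage("brute-force solution", 20),
    Stage("linear-time optimization", 10),
    Stage("stream-input enhancement", 15),
]
BENCHMARK_HINTS = 1


def benchmark_feedback(minutes_taken: List[int], hints_used: int) -> List[str]:
    """minutes_taken[i] is the time spent on stage i; missing entries mean the
    candidate never reached that stage."""
    lines = []
    for i, stage in enumerate(BENCHMARK):
        if i < len(minutes_taken):
            lines.append(
                f"{stage.name}: you took {minutes_taken[i]} min; "
                f"the strongest performers take about {stage.benchmark_minutes} min."
            )
        else:
            lines.append(
                f"{stage.name}: not reached; "
                f"the strongest performers finish it in about {stage.benchmark_minutes} min."
            )
    lines.append(
        f"Hints: you needed {hints_used}; "
        f"the strongest performers usually need at most {BENCHMARK_HINTS}."
    )
    return lines


# A candidate who finished only the first layer and needed three hints.
report = benchmark_feedback([40], hints_used=3)
for line in report:
    print(line)
```

The output is deliberately free of any pass/fail verdict: it only states what happened and what the benchmark is.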

Some of our interviewers ask candidates to perform detailed self-assessments at the end of the interview before giving them feedback. This is an advanced technique, and if you’re new to giving synchronous feedback, I wouldn’t do it in your first few interviews.

However, once you become comfortable, this approach can be a great way to zero in on the areas that the candidate needs the most help on.

If you do end up taking the self-assessment route, it’s good to ask candidates some leading questions. For instance, for algorithmic interviews, you can ask:

- How well did you solve the problem and arrive at an optimized solution?

- How clean was your code?

- Where did you struggle?

While the candidate responds, take notes and then go through their points together, speaking to each point in detail. For instance, if a candidate rates themselves well on code quality but poorly on their ability to solve the problem, you can agree or disagree and give them benchmarks (as discussed above) for both.

In summary

- Take detailed notes during the interview, ideally with time stamps, that you can refer to later.

- Don’t lead with whether they passed or failed. Instead, be specific and constructive right away. This will divert the candidate’s attention away from the outcome and put them in the right headspace to receive feedback.

- As much as possible, give objective benchmarks for performance. For instance, tell candidates that the strongest performers are usually able to finish part 1 within 20 minutes, part 2 within 10 minutes, and part 3 within 15 minutes, with one hint at most.

- Once you become comfortable with giving feedback, you can try asking candidates to assess their own performance and then use their self-assessment as a rubric to work through, point by point.
