Research papers come out far too frequently for anyone to read them all. That’s especially true in the field of machine learning, which now affects (and produces papers in) practically every industry and company. This column aims to collect some of the more interesting recent discoveries and papers — particularly in, but not limited to, artificial intelligence — and explain why they matter.

This week we have a number of entries aimed at identifying or confirming bias or cheating behaviors in machine learning systems, or failures in the data that support them. But first a purely visually appealing project from the University of Washington being presented at the Conference on Computer Vision and Pattern Recognition.

They trained a system that recognizes and predicts the flow of water, clouds, smoke and other fluid features in photos, animating them from a single still image. The result is quite cool.

Why, though? Well, for one thing, the future of photography is code, and the better our cameras understand the world they’re pointed at, the better they can accommodate or recreate it. Fake river flow isn’t in high demand, but accurately predicting movement and the behavior of common photo features is.

An important question to answer in the creation and application of any machine learning system is whether it’s actually doing the thing you want it to. The history of “AI” is riddled with examples of models that found a way to look like they’re performing a task without actually doing it — sort of like a kid kicking everything under the bed when they’re supposed to clean their room.

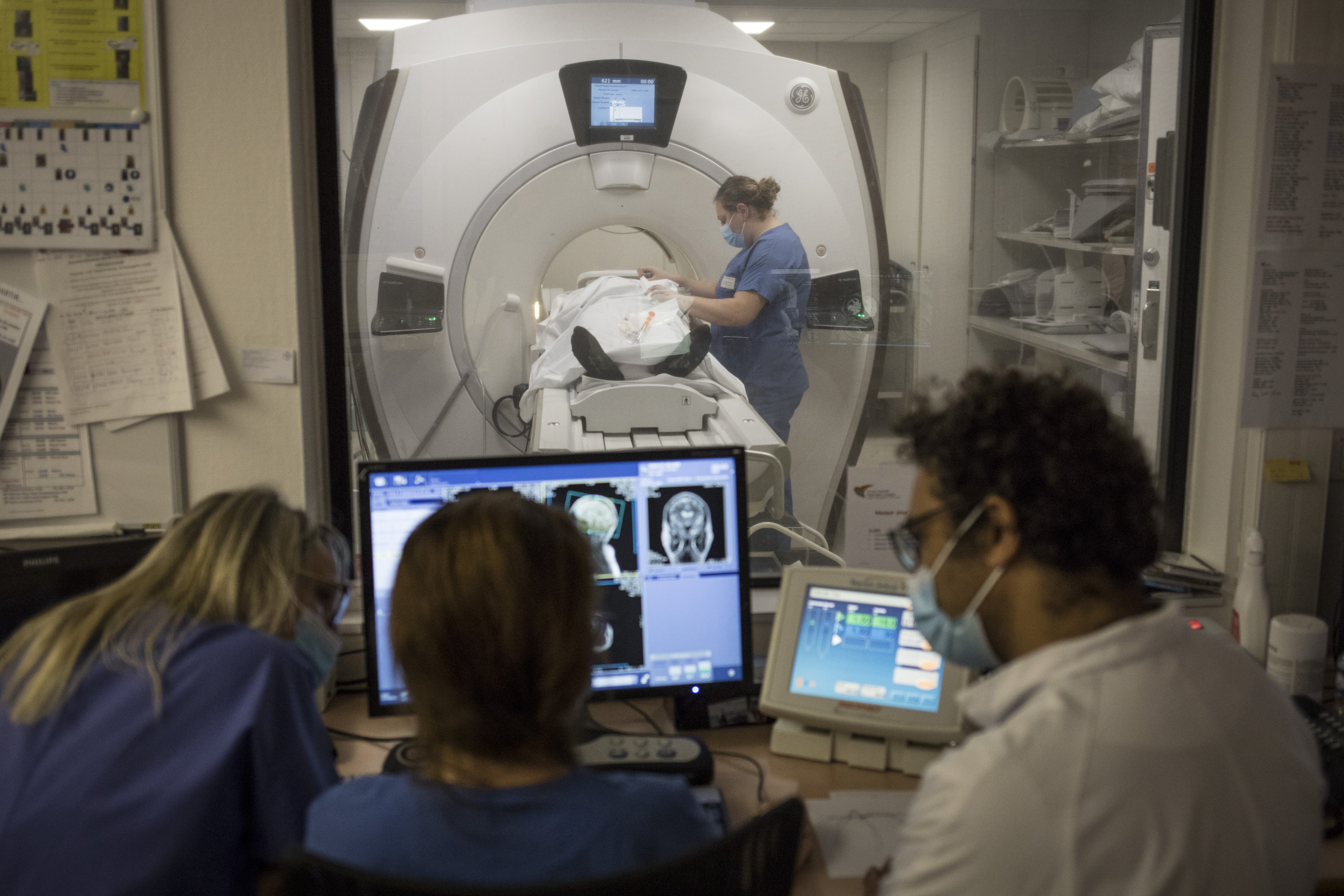

This is a serious problem in the medical field, where a system that’s faking it could have dire consequences. A study, also from UW, finds models proposed in the literature have a tendency to do this, in what the researchers call “shortcut learning.” These shortcuts could be simple (basing an X-ray’s risk on the patient’s demographics rather than the data in the image, for instance) or more idiosyncratic, like relying heavily on conditions in the hospital the training data came from, which makes it impossible to generalize to others.

The team found that many models basically failed when used on datasets that differed from their training ones. They hope that advances in machine learning transparency (opening the “black box”) will make it easier to tell when these systems are skirting the rules.
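To make the failure mode concrete, here is a toy sketch in Python. It is not the UW team’s method: the data is synthetic, and the names `make_dataset`, `fit_stump` and the “site marker” feature are hypothetical, invented for illustration. The “model” simply picks whichever single feature best matches the label in training, which is enough to show how a spurious cue can win in training and then collapse on data from elsewhere.

```python
import random

def make_dataset(n, shortcut_agreement, seed):
    """Each row is (signal, site_marker, label), all 0 or 1 (synthetic data)."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        label = rng.randint(0, 1)
        # Genuine but noisy evidence: matches the label 80% of the time.
        signal = label if rng.random() < 0.8 else 1 - label
        # Spurious cue: matches the label with the given probability.
        marker = label if rng.random() < shortcut_agreement else 1 - label
        rows.append((signal, marker, label))
    return rows

def accuracy(rows, feature_index):
    """Accuracy of predicting the label by copying one feature."""
    hits = sum(1 for row in rows if row[feature_index] == row[2])
    return hits / len(rows)

def fit_stump(rows):
    """Pick whichever single feature best matches the label in training."""
    return max((0, 1), key=lambda i: accuracy(rows, i))

# In the training hospital the marker tracks the label perfectly;
# in the external dataset it is no better than a coin flip.
train = make_dataset(2000, shortcut_agreement=1.0, seed=0)
external = make_dataset(2000, shortcut_agreement=0.5, seed=1)

chosen = fit_stump(train)  # index 1 is the site marker, i.e. the shortcut
train_acc = accuracy(train, chosen)
external_acc = accuracy(external, chosen)
print(chosen, round(train_acc, 3), round(external_acc, 3))
```

The stump latches onto the site marker because nothing in the training data penalizes it for doing so. The only way to notice is to score it on data where the shortcut no longer holds, which is roughly what testing on a different dataset accomplishes.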

An example of the opposite can be found in climate modeling, which involves such complex systems that supercomputers can spin their bits for months just to simulate the movements of air and water in a tiny volume. Simplified models can be created by feeding the appropriate data into a machine learning system, which may, based on 23 hours of data, predict the 24th. But is the system actually modeling the climate factors, or just making statistically probable guesses at the outcomes?
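As a heavily simplified sketch of that question, assume the “climate” is nothing more than a clean daily cycle sampled hourly. The Python below fits a two-term autoregressive model to 23 hours and predicts the 24th. Everything here (the sine-wave data, the `fit_ar2` helper) is a hypothetical stand-in; real emulators and the systems they imitate are vastly more complex.

```python
import math

def fit_ar2(series):
    """Least-squares fit of x[t] = a*x[t-1] + b*x[t-2] via the 2x2 normal equations."""
    s11 = s12 = s22 = r1 = r2 = 0.0
    for t in range(2, len(series)):
        x1, x2, y = series[t - 1], series[t - 2], series[t]
        s11 += x1 * x1
        s12 += x1 * x2
        s22 += x2 * x2
        r1 += x1 * y
        r2 += x2 * y
    det = s11 * s22 - s12 * s12
    # Cramer's rule for the two coefficients.
    a = (r1 * s22 - r2 * s12) / det
    b = (s11 * r2 - s12 * r1) / det
    return a, b

# Toy "observations": a zero-centred daily cycle sampled once per hour.
hours = [math.sin(2 * math.pi * h / 24) for h in range(24)]
observed, actual_24th = hours[:23], hours[23]

a, b = fit_ar2(observed)
predicted_24th = a * observed[-1] + b * observed[-2]
print(round(predicted_24th, 4), round(actual_24th, 4))
print(round(a, 4), round(b, 4))
```

A good prediction alone doesn’t settle the matter. What is suggestive in this toy case is that the fitted coefficients line up with the recurrence a pure oscillation obeys (`a` close to 2·cos(2π/24), `b` close to -1), so the model has recovered the rule generating the data rather than memorizing values. Checking a real data-driven climate model for that kind of structure is far harder, but the spirit is similar.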

A study started at the University of Reading had the happy outcome of finding, by looking into these systems very carefully, that they actually do what they claim to. “In some sense, it means the data-driven method is intelligent. It is not an emulator of data. It is a model that captures the dynamical processes. It is able to reconstruct what lies behind the data,” said co-author Valerio Lucarini.

That kind of confidence will be useful in applications like this flood prediction project from Lancaster University, earlier versions of which suffered from the same lack of assurance. Professor Plamen Angelov is embarking on an improved flooding model that is not only faster and more accurate, but also explainable. You can probably expect this kind of “here’s how we know how we know” upgrade to become increasingly common wherever AI systems have the possibility of causing harm.

Some situations are not so easily quantifiable, such as an algorithm meant to detect whether a student is likely to drop out of college. There’s the opportunity for shortcuts here, too, if the system picks up on correlations that aren’t meaningful. Cornell researchers looked into whether including protected demographic information such as race, gender and income might affect these models, and found that, fortunately, those attributes were not throwing off the estimates one way or the other. In fact, the team recommended including that data because it produces a more holistic view inclusive of these factors.

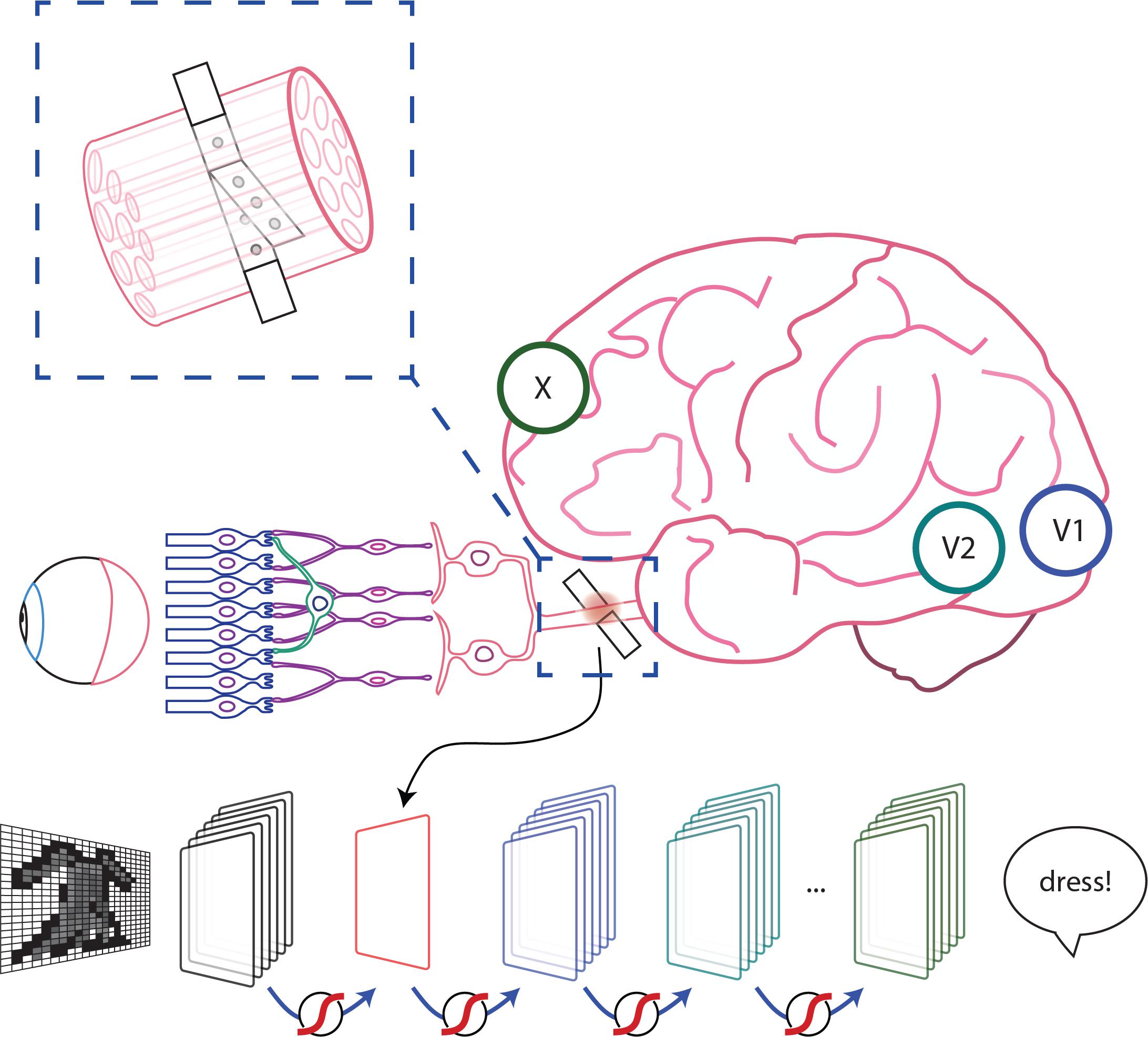

Simulating neural networks — that is, the ones in our heads — may seem like an obvious application of neural networks — that is, the ones in our computers — but it’s hardly as straightforward as it sounds. The latter are inspired by the former, but it doesn’t mean they’re naturally good at simulating them.

That said, networks of neurons in the brain can be monitored and their behavior predicted much as in any other complex system. That’s the hope EPFL researchers have in a new project aiming to build fundamentals for visual prosthetics by modeling how the visual cortex of a blind person reacts to certain stimuli. If it can be predicted well, potential users won’t have to be tested as frequently or invasively, since researchers should be able to simulate, from some telltale early signs, how the cortex will adapt going forward.

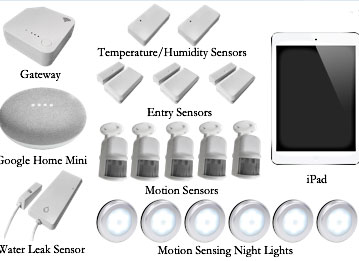

Folks aging into conditions like dementia require a lot of oversight, but there are rarely enough caregivers to provide it. Smart home devices and a touch of machine learning could help with that, though, suggests a recent study by researchers at UC Berkeley.

The homes of people suffering from dementia and other conditions were kitted out with sensors to tell when a faucet was left on, when someone was in bed, if a door was left open and so on, and this information was monitored closely to find a baseline of activity. Then, if the person deviates from that baseline, indicating an episode of confusion or physical distress, their caregiver can be alerted. The setup lessened worry in caregivers and added a layer of responsive tech to the situation that can be flexibly applied. Dealing with a handful of low-data streams isn’t exactly a made-for-AI problem, but machine learning can help deploy and monitor these systems in a standard way.
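The study’s actual detection method isn’t described here, so the following is only a minimal sketch of the baseline-then-deviation idea, using a plain z-score in Python. The sensor readings, the 3-standard-deviation threshold and the function names are all assumptions made for illustration.

```python
from statistics import mean, stdev

def build_baseline(history):
    """Summarise normal behaviour as (mean, standard deviation)."""
    return mean(history), stdev(history)

def is_unusual(value, baseline, threshold=3.0):
    """Flag a reading more than `threshold` standard deviations from the mean."""
    mu, sigma = baseline
    if sigma == 0:
        # No variation in the baseline: anything different counts as unusual.
        return value != mu
    return abs(value - mu) / sigma > threshold

# Hypothetical data: minutes per day that a kitchen faucet was running.
faucet_minutes = [12, 15, 11, 14, 13, 12, 16, 14, 13, 15]
baseline = build_baseline(faucet_minutes)

for today in (14, 55):
    if is_unusual(today, baseline):
        print(f"{today} min: alert caregiver")
    else:
        print(f"{today} min: within baseline")
```

A fixed threshold like this is crude. A deployed system would presumably need per-person baselines across several sensors and some tolerance for routines that drift over time, which is where the machine learning part would come in.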

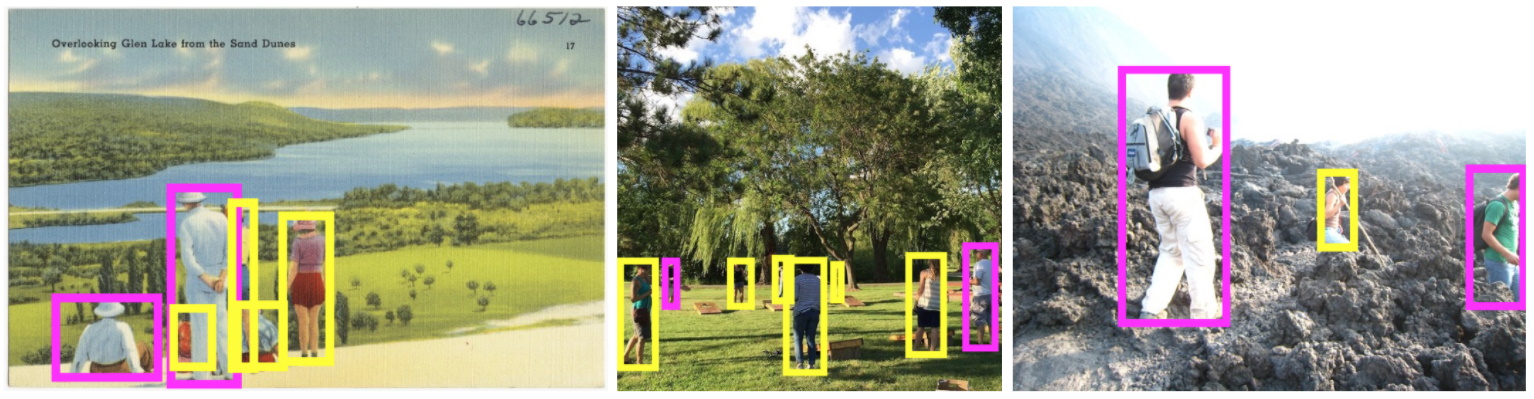

Older folks, among others, are better represented in a large image dataset from Google that a team revisited to look into fairness metrics. In the case of this dataset, comprising 9 million images of which 100,000 had people, that meant considering whether labels and bounding boxes were applied fairly and consistently. Turns out it wasn’t quite the case!

In a second pass at these labels, the team identified tens of thousands of new people in the photos, and updated how age and gender are represented. Instead of asking labelers to draw boxes around any “boy” or “woman” they see, they now box up any “person” and then add labels of their gender and age presentation as they perceive it. This more inclusive process is also more practical, since it’s far more likely that a system will want to look for “people” and not just people with a certain gender presentation. If, after a person is identified, their age, gender or appearance matters for whatever reason, that data sits on top of personhood rather than replacing it.

As the researchers note, the resulting dataset is more inclusive and much better for it, streamlining processes and reducing the risk of siphoning human biases into ML systems.
