Research papers come out at far too rapid a rate for anyone to read them all, especially in the field of machine learning, which now affects (and produces papers in) practically every industry and company. This column aims to collect the most relevant recent discoveries and papers, particularly in but not limited to artificial intelligence, and explain why they matter.

This week in Deep Science spans the stars all the way down to human anatomy, with research concerning exoplanets and Mars exploration, as well as understanding the subtlest habits and most hidden parts of the body.

Let’s proceed in order of distance from Earth. First is the confirmation of 50 new exoplanets by researchers at the University of Warwick. It’s important to distinguish this process from discovering exoplanets among the huge volumes of data collected by various satellites. These planets were flagged as candidates but no one has had the chance to say whether the data is conclusive. The team built on previous work that ranked planet candidates from least to most likely, creating a machine learning agent that could make precise statistical assessments and say with conviction, here is a planet.

“A prime example when the additional computational complexity of probabilistic methods pays off significantly,” said the university’s Theo Damoulas. It’s an excellent example of a field where marquee announcements, like the Google-powered discovery of Kepler-90 i, represent only the earliest results rather than a final destination, emphasizing the need for further study.

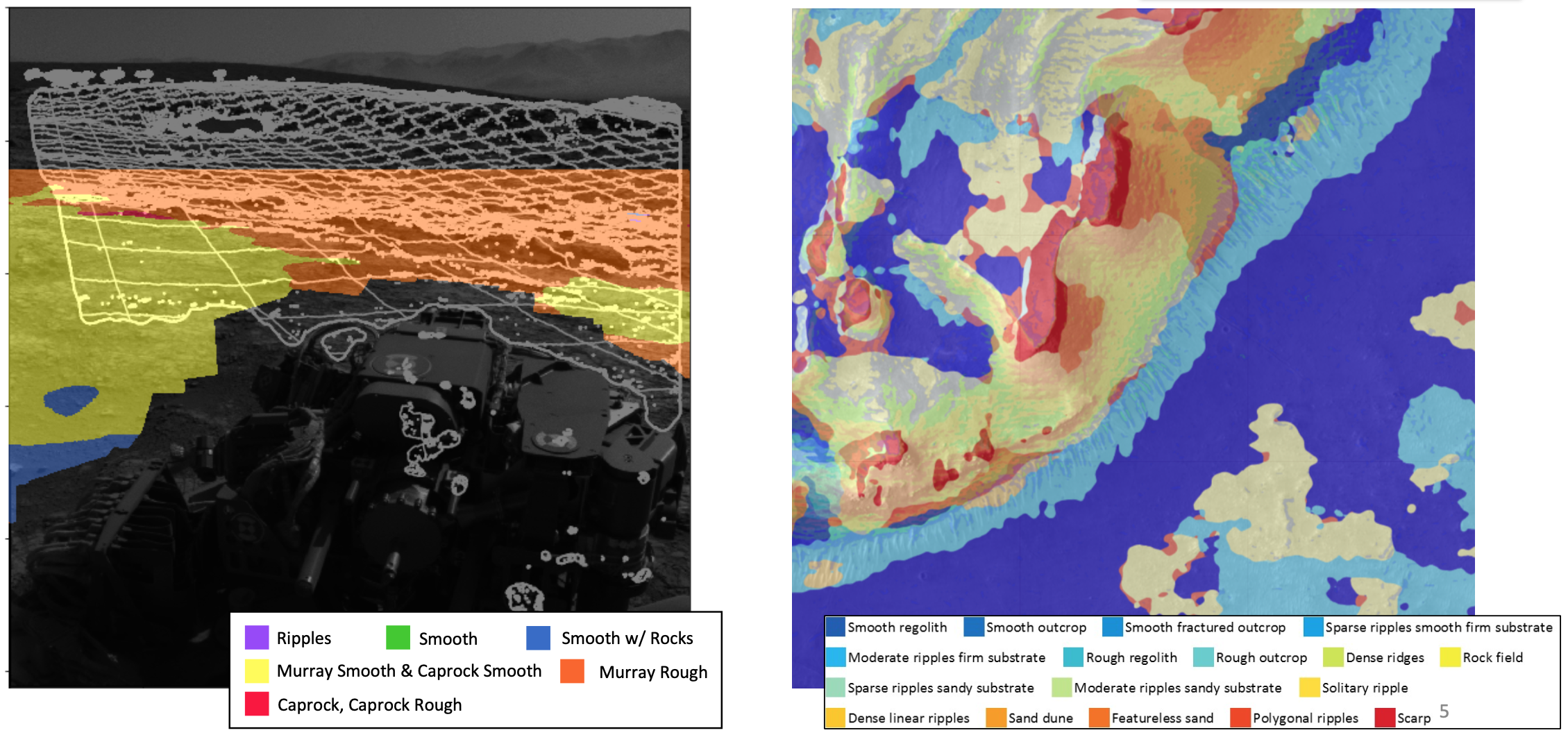

In our own solar system, we are getting to know our neighbor Mars quite well, though even the Perseverance rover, currently hurtling through the void in the direction of the red planet, is, like its predecessors, a very resource-limited platform. With a small power budget and years-old radiation-hardened CPUs, there’s only so much in the way of image analysis and other AI-type work it can do locally. But scientists are preparing for when a new generation of more powerful, efficient chips makes it to Mars.

Automatically classifying terrain, autonomously identifying and navigating to objects of interest, and local hosting and processing of scientific data are all on the table, as proposed by the Machine Learning-based Analytics for Autonomous Rover Systems (MAARS) program. Though the capabilities of a future rover may be orders of magnitude greater than what we have headed there now, efficiency and reliability will always be paramount — it’s the ultimate in edge deployment. You can even help train a Mars-bound navigation algorithm right now.

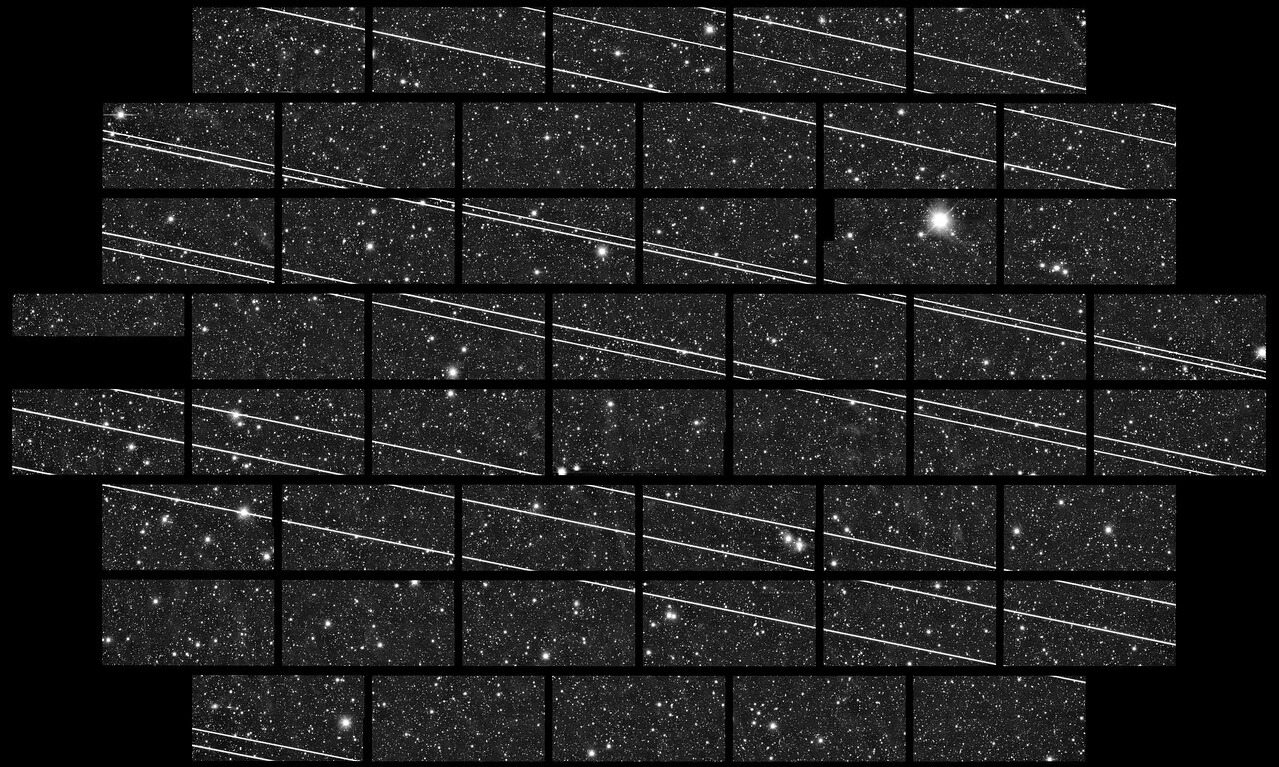

In orbit, the proliferation of communications satellites in constellations like SpaceX’s Starlink is leading to much worry on the part of astronomers, whose Earth-based telescopes must look past those pesky objects to observe the sky. A recent multiorganization study simulating a satellite-filled future night sky concludes that it will “fundamentally change astronomical observing,” and that “no combination of mitigations can fully avoid the impacts.”

Among the recommendations, software to “identify, model, subtract and mask satellite trails in images on the basis of user-supplied parameters” is foremost for observatories. This kind of task is highly suitable for ML agents, as we’ve seen in other digital media manipulation tools. I would be astonished if there were fewer than a dozen concurrent projects in private and public R&D to address this need, as it will be a persistent part of all astronomical observation going forward.

One more space-surface interaction we need to be aware of: Turns out quantum computers may be extremely sensitive to natural radiation, including the minute amounts emitted by metals in soil and of course those rascally cosmic rays. Just one more thing to isolate those fragile qubits from.

Another quick note for those of us here in the atmosphere: Lawrence Berkeley National Lab tested a handful of consumer-grade air quality monitors to see if they actually do what they’re supposed to. Surprisingly, they do, but consistently overestimate the level of particulates in the air by as much as 2.4 times. That makes sense from a liability point of view: better to overreport than under.

The study suggests that a network of these cheaper sensors, while their readings are not to be relied on at face value, could prove an invaluable resource for tracking air quality trends across scales. If these devices are not already contributing to environmental and climate research, they should be and probably will be soon. But like other IoT devices, they’ll face privacy questions. The benefits of establishing clear rules and permissions for this kind of thing are becoming more obvious by the day.
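
To make the "not at face value" point concrete, here is a minimal sketch of how a consistently high-reading monitor could be rescaled against a co-located reference instrument. It is not the lab's method: the function names and the readings are hypothetical, and it assumes the bias is a simple constant multiplier, which real sensors may not obey.

```python
# Illustrative only: readings are made-up values in micrograms per cubic meter,
# not data from the study.

def fit_scale(sensor, reference):
    """Least-squares factor k that minimizes sum((reference - k * sensor)^2)."""
    numerator = sum(s * r for s, r in zip(sensor, reference))
    denominator = sum(s * s for s in sensor)
    if denominator == 0:
        raise ValueError("sensor readings are all zero; cannot fit a scale")
    return numerator / denominator


def correct(sensor, k):
    """Apply the fitted factor to raw sensor readings."""
    return [k * s for s in sensor]


# Hypothetical cheap monitor that reads 2.4 times higher than the reference.
sensor_readings = [24.0, 48.0, 12.0]
reference_readings = [10.0, 20.0, 5.0]

k = fit_scale(sensor_readings, reference_readings)
corrected = correct(sensor_readings, k)
```

Even without a reference instrument, trends survive a constant multiplier: a doubling in the raw reading is still a doubling, which is why a network of these devices can be useful for tracking changes.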

On the ground, the U.S. Army Research Lab has come up with an interesting way to promote a sort of symbiosis between humans and robots, each limited in their own way. A robot buddy traveling alongside a human can scan the environment more quickly and thoroughly than a person, but lacks the ability to tell whether changes it observes around it are important. The ARL and UC San Diego put together a system that watches for discrepancies in what its lidar systems detect, such as movement or a new or absent object, and highlights them in a heads-up display worn by a human. It skips the whole problem of “understanding” what’s happening by passing that on to a human, while leveraging the robot’s superiority in superficial sensing. This paradigm could be a very helpful one — and a relief to those rightly worried that robots aren’t really smart enough to make judgments like this.
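
The "flag it, don't interpret it" idea can be sketched in a few lines. This is an assumption-laden toy, not the ARL and UC San Diego system: it buckets two lidar scans into coarse grid cells and reports cells that gained or lost points, leaving the question of whether a change matters to the person wearing the display. The function names and the 0.5-unit cell size are hypothetical.

```python
import math


def voxelize(points, cell=0.5):
    """Map each (x, y, z) point to the integer grid cell that contains it."""
    return {tuple(math.floor(coord / cell) for coord in point) for point in points}


def discrepancies(baseline, current, cell=0.5):
    """Return grid cells that are newly occupied or newly empty between scans."""
    before = voxelize(baseline, cell)
    after = voxelize(current, cell)
    return {"appeared": after - before, "vanished": before - after}


# Hypothetical scans: one object stays put, one disappears, one shows up.
baseline_scan = [(0.1, 0.1, 0.1), (2.0, 0.0, 0.0)]
current_scan = [(0.2, 0.2, 0.2), (4.0, 1.0, 0.0)]

changes = discrepancies(baseline_scan, current_scan)
```

A real system would have to align the two scans first and tolerate sensor noise; the set difference only shows where the human's attention would be directed.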

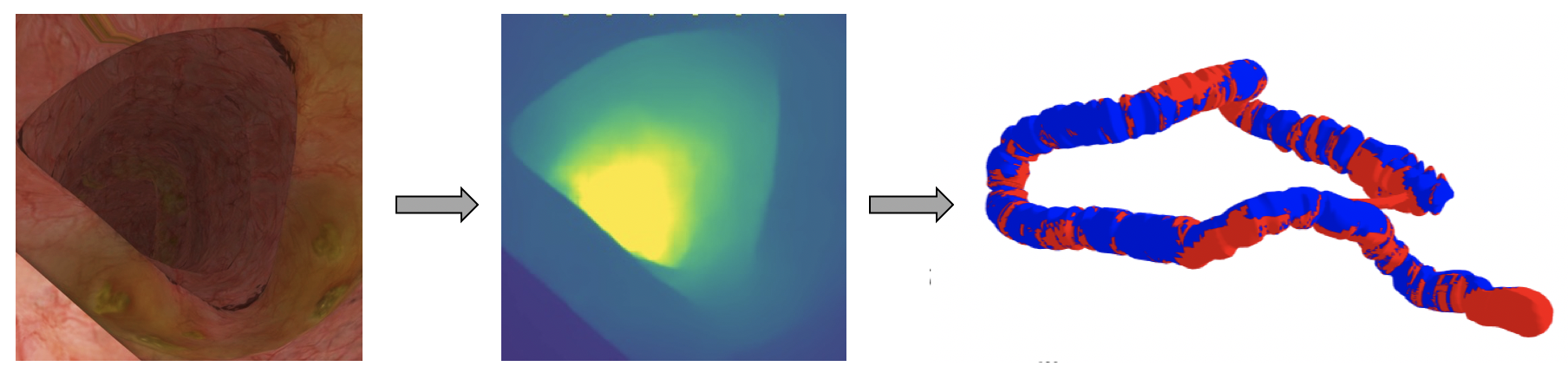

Lastly, some news inside the body that’s been made unfortunately timely by the tragic passing of Chadwick Boseman. Colorectal cancer is deadly and difficult to detect early, and a shortcoming of colonoscopies is that it is difficult to say with certainty that the doctor has inspected every square inch of the tract. Google’s Colonoscopy Coverage Deficiency via Depth algorithm observes video of the procedure and builds a 3D map of the colon, noting as it goes which parts of it weren’t adequately visible to the camera.

Ideally the procedure could capture enough to be sure, but this would be a helpful tool to guard against malpractice or just improve efficiency so that a second procedure isn’t necessary. Colorectal cancer is a major risk, especially for men, and furthermore especially for Black men, who tend to develop it earlier and more often. More and better tools may help detect it earlier and save thousands of lives.

ECCV

The European Conference on Computer Vision took place in late August and, as always, a lot of interesting papers came out of it.

Facebook has a fun new database and tool called ContactPose, a collection of grips of everyday objects by a variety of people either “using” or “handing off” whatever it is. Gripping an object in an intelligent way is a remarkably difficult problem and the best source for how to do it is human technique.

ContactPose provides joint and hand poses for things like game controllers, cameras, bananas and sunglasses, showing contact heat maps and other information useful to a computer trying to figure out how to hold something. You can play around with it here.

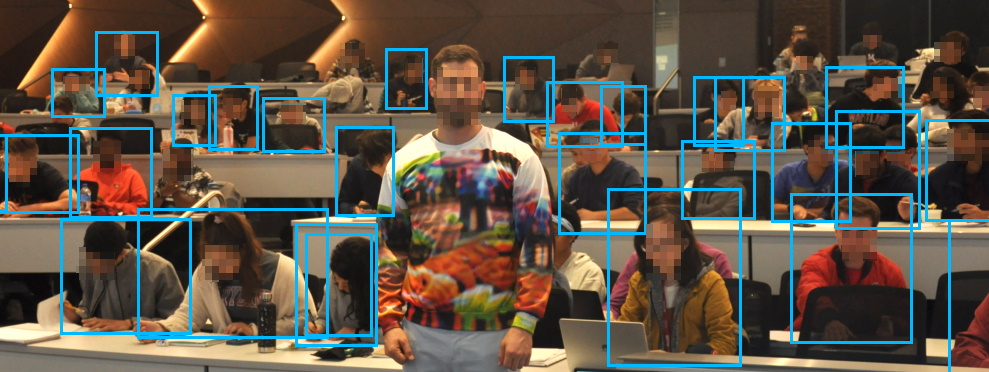

The company is also, predictably, concerned that tools used to identify and analyze individuals in photos may be disrupted somehow. We’ve seen studies that showed how taking advantage of a machine learning model’s biases can make a turtle be classified as a gun, and so on, but a harder problem is tricking the AI into thinking that there’s nothing instead of something.

This paper shows that it is indeed possible to engineer patterns that, worn or otherwise shown to a computer vision system, seem to confound it and make it think that the wearer is not a person but part of the background. The resulting clothing isn’t exactly haute couture, but more attractive scrambler patterns are probably on the way.

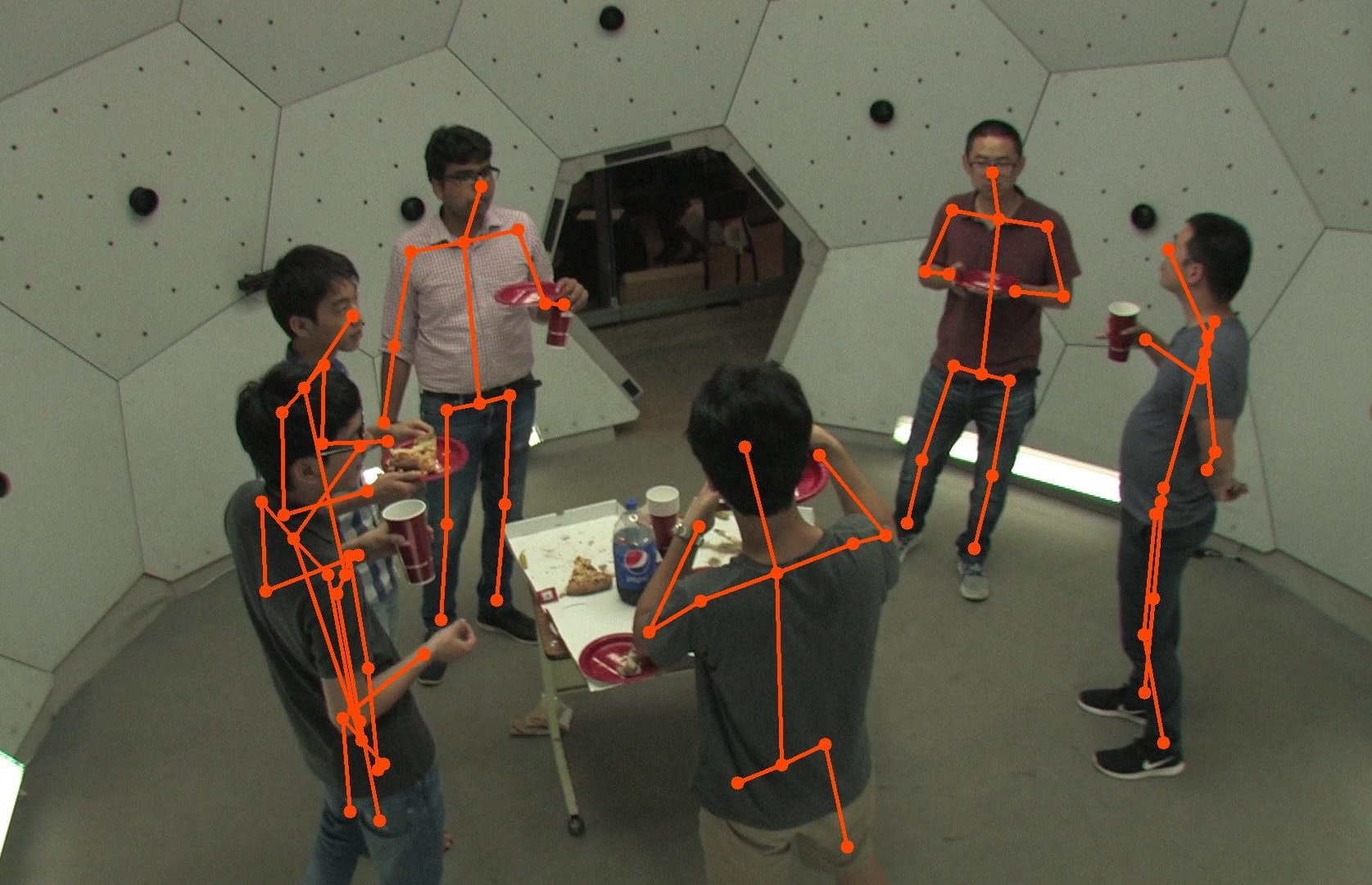

Microsoft is addressing an old favorite: estimating the positions of multiple people on camera. This kind of research goes back to the Kinect and while it never really took off in terms of gaming, it has proven useful in countless other ways.

This paper looks at a new way of identifying and analyzing the body positions of multiple people from the perspectives of multiple cameras simultaneously. It can be tough to figure that out from one 2D image, but with two or three of them it becomes a solvable problem — just a computationally complex one. But they’re confident in this approach, which will eventually be documented at this GitHub page.
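
Why a second camera makes the problem solvable can be shown with textbook geometry. The sketch below is not the paper's method; it is plain midpoint triangulation, assuming each camera's position is known and each has already produced a viewing ray toward the same body joint. All names and coordinates are hypothetical.

```python
def dot(u, v):
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(u, v))


def triangulate_midpoint(center1, ray1, center2, ray2):
    """Estimate a 3D point as the midpoint of the closest approach of two rays."""
    w = [p - q for p, q in zip(center1, center2)]
    a, b, c = dot(ray1, ray1), dot(ray1, ray2), dot(ray2, ray2)
    d, e = dot(ray1, w), dot(ray2, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        raise ValueError("rays are parallel; depth cannot be recovered")
    t1 = (b * e - c * d) / denom  # distance along the first ray
    t2 = (a * e - b * d) / denom  # distance along the second ray
    p1 = [o + t1 * r for o, r in zip(center1, ray1)]
    p2 = [o + t2 * r for o, r in zip(center2, ray2)]
    return [(x + y) / 2 for x, y in zip(p1, p2)]


# Hypothetical setup: two cameras two units apart, both looking at one joint.
joint = triangulate_midpoint((0, 0, 0), (1, 2, 5), (2, 0, 0), (-1, 2, 5))
```

One ray alone leaves the depth ambiguous; the second pins it down. The hard part with several people in frame is deciding which detection in one view corresponds to which in the other, and that step is omitted here.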

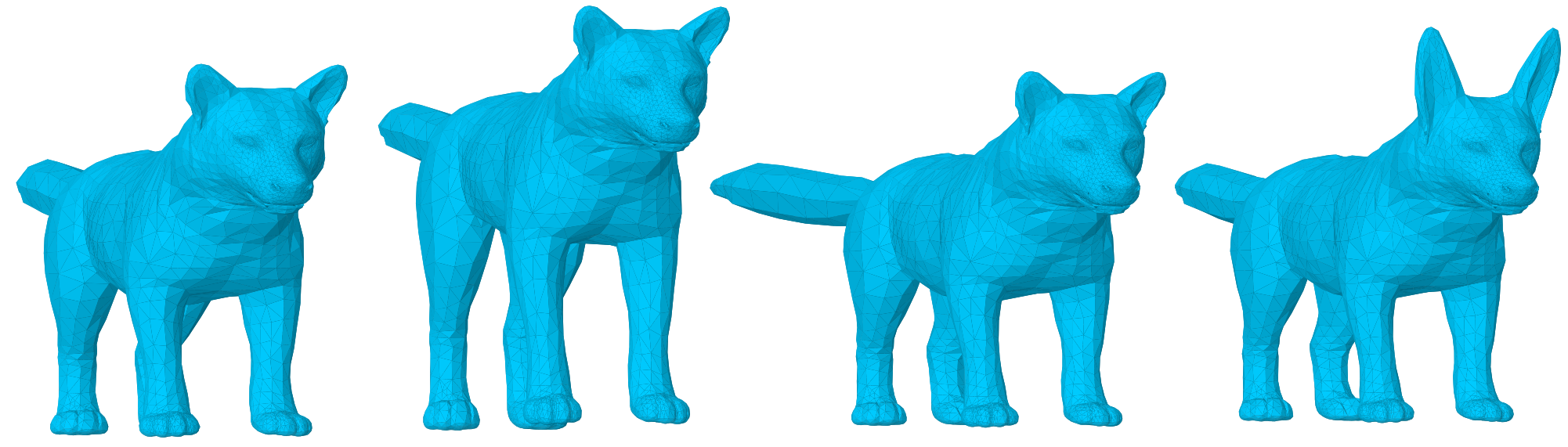

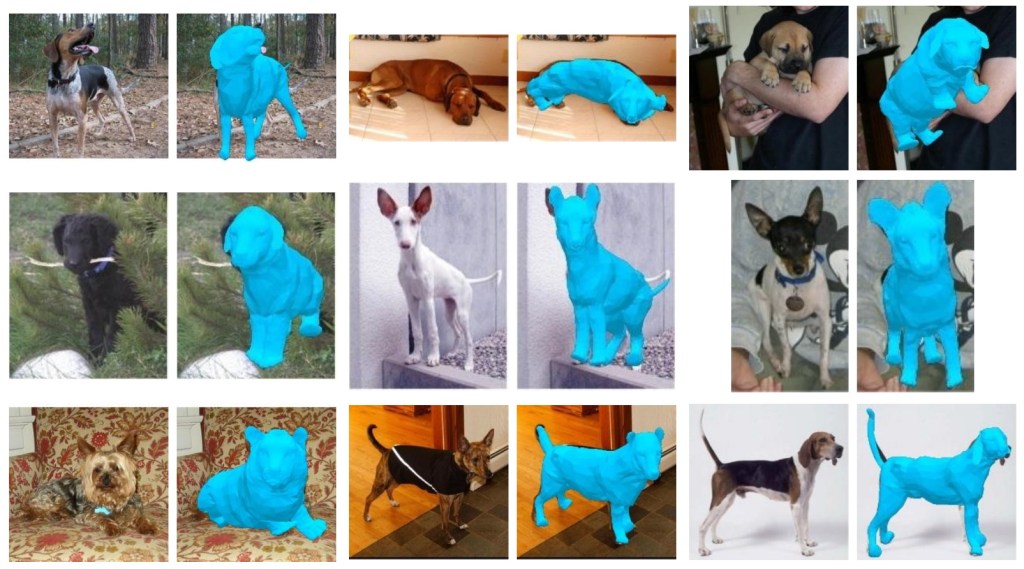

Just because a problem is a little wacky doesn’t mean it isn’t worth solving. Such is the case with this paper describing “end-to-end dog reconstruction from monocular images.” Maybe the utility of being able to tell the exact shape and position of a dog from a single image isn’t obvious to you. Or me. Indeed, perhaps there is no “utility” as the concept is commonly understood.

But think about it this way: Humans can recognize dogs instantly no matter how they’ve folded their furry bodies, or whether they’re a small dog with long floppy ears or a big one with pointy triangular ears. If computer vision systems are to meet or exceed the capabilities of humans, shouldn’t they at least be able to do that?

Seriously: Being able to identify an object (in this case an animal) despite that object having numerous unpredictable variations is a powerful and fundamental vision task, one we do every day almost automatically. Pursuing it as an abstract goal is an important line of inquiry and while “reconstructing a 3D dog mesh” won’t save any lives, it’s important basic research that happens to involve a lot of very good girls and boys.

Google’s ECCV spread had fewer obvious standouts, though this paper points to a feature I would appreciate and have secretly wished for from Maps: live shadows. Or not live exactly but reasonably accurate predictions. Using multiple images taken of the same location at street level, the team can create a good model of how the sun and other lights affect the scene, allowing them to change it arbitrarily for positions of the sun or sky conditions.

If this doesn’t end up in Google Maps within a year or two I’ll be very surprised. Having Street View reflect current weather patterns, or being able to tell whether a cafe is in the sun or shade at a given time on a given day, would be a hugely useful feature and the kind of wizardry the company loves to pack into one of the few products where it is truly still a leader. (Here’s a longer video on how it works.)

Another area where it excels is computational photography, and a lot of its ECCV papers are the kind of thing that leads to products down the line there as well. Pose estimation, detection of objects and actions in videos, accelerating lidar analysis, that sort of thing. Anyone with a competing product could probably make a lot of informed speculation about their roadmap. But as few have more general interest, I’ll leave it to them.
