Twenty-five years after the introduction of the World Wide Web, the Information Age is coming to an end. Thanks to mobile screens and Internet everywhere, we’re now entering what I call the “Experience Age.”

When was the last time you updated your Facebook status? Maybe you no longer do? It’s been reported that original status updates by Facebook’s 1.6 billion users are down 21 percent.

The status box is an icon of the Information Age, a period dominated by desktop computers and a company’s mission to organize all the world’s information. The icons of the Experience Age look much different, and are born from micro-computers, mobile sensors and high-speed connectivity.

The death of the status box is a small part of a larger shift away from information and toward experience. What’s driving this shift? In short, the changing context of our online interactions, shaped by our connected devices.

You are not your profile

To illustrate how this is playing out, think of Facebook and Snapchat.

Facebook is an Information Age native. Along with other social networks of its generation, Facebook was built on a principle of the desktop era — accumulation.

Accumulation manifests in a digital profile where my identity is the sum of all the information I’ve saved: text, photos, videos, web pages. (Evan Spiegel first explored this in a 2015 YouTube video titled “What is Snapchat?”) In the Information Age we represented ourselves with this digital profile.

But mobile has changed how we view digital identity. With a connected camera televising our life in-the-moment, accumulated information takes a back seat to continual self-expression. The “virtual self” is becoming less evident. I may be the result of everything I’ve done, but I’m not the accumulation of it. Snapchat is native to this new reality.

Many people think Snapchat is all about secrecy, but the real innovation of Snapchat’s ephemeral messages isn’t that they self-destruct. It’s that they force us to break the accumulation habit we brought over from desktop computing. The result is that the profile is no longer the center of the social universe. In the Experience Age you are not a profile. You are simply you.

Show, don’t tell

The central idea of the Experience Age is this — I’ll show you my point of view, you give me your attention. I hear you yelling, “That’s always been the story of social!” And it has. But what’s changed is that the stories we tell each other now begin and end visually, making the narrative more literal than ever.

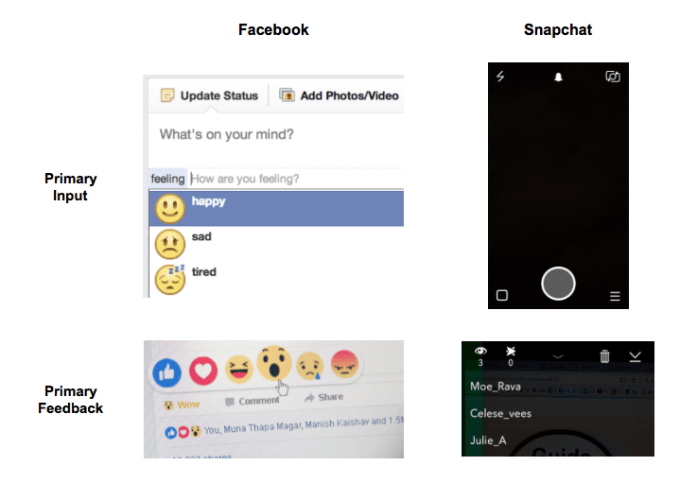

In the Information Age, the start of communication was information. On Facebook you type into a status box, add metadata such as your location and select from a hierarchy of emotions for how you feel. This information-first approach is also visible in Facebook’s feedback mechanisms — six pre-selected reactions with threaded commenting.

By contrast, Snapchat always starts with the camera. Feedback is sent passively — swiping up on your story reveals which friends watched your snaps. In the Experience Age, the primary input is visual and the dominant feedback is attention.

Today the feedback loop connecting sharing and attention starts and ends on mobile; in the future, it could start with contact lenses and end in VR, for example.

The experience stack

This reality frames Facebook’s recent investments, which bring live video, 360-degree cameras and VR together in a single product portfolio. But Facebook isn’t the only tech giant looking ahead and seeing how all these technologies might line up. By now you’ve likely heard of Magic Leap, the super-stealth AR startup with a $4.5 billion valuation funded by the likes of Google and Alibaba.

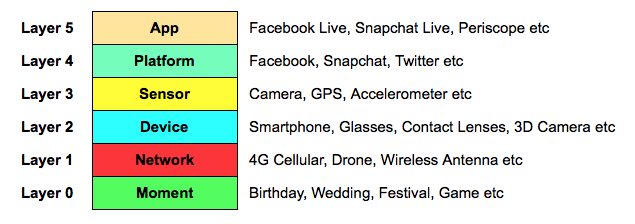

A global arms race is underway, and it is beginning to create a layering of technologies I like to call the experience stack.

At the bottom is Layer 0, the real world. The full stack is in service of capturing and communicating real-world moments. Reality is its foundation.

As you move up, the layers transition from physical to logical. At the top is the application layer made up of products like Snapchat Live and Periscope. Tomorrow’s products will be even more immersive. Take for example the relaunch of Sean Parker’s Airtime and Magic Leap’s A New Morning.

The experience stack will drive new products to market faster as each layer can grow independently, while at the same time benefiting from advancements in the layers below. An example of this phenomenon is high-speed 3G enabling Apple’s App Store, which together advanced mobile as a whole. The best products of the Experience Age will be timely new applications that leverage step-change advancements in bottom layers. Given that some layers are still nascent, tremendous opportunity is ahead.

Our online and offline identities are converging; the stories we tell each other now start and end visually; and investments at every layer of a new stack are accelerating the development of experience-driven products. Taken together, these trends have cracked open the door for a new golden age of technology.

It’s an exciting time to be building.
